AI’s Next Bottleneck Is the Grid

Are we watching the birth of a new industrial class? Also: Meta develops a gesture-controlled wristband, and DeepMind can now decipher ancient texts

Hi! I would love your help improving The Strange Review. Would you like to hear from us more often, or would you prefer a more in-depth weekly breakdown? Let us know in the poll below.

For years, we worried about the chip shortage.

Now we’re staring down something even more fundamental: electricity.

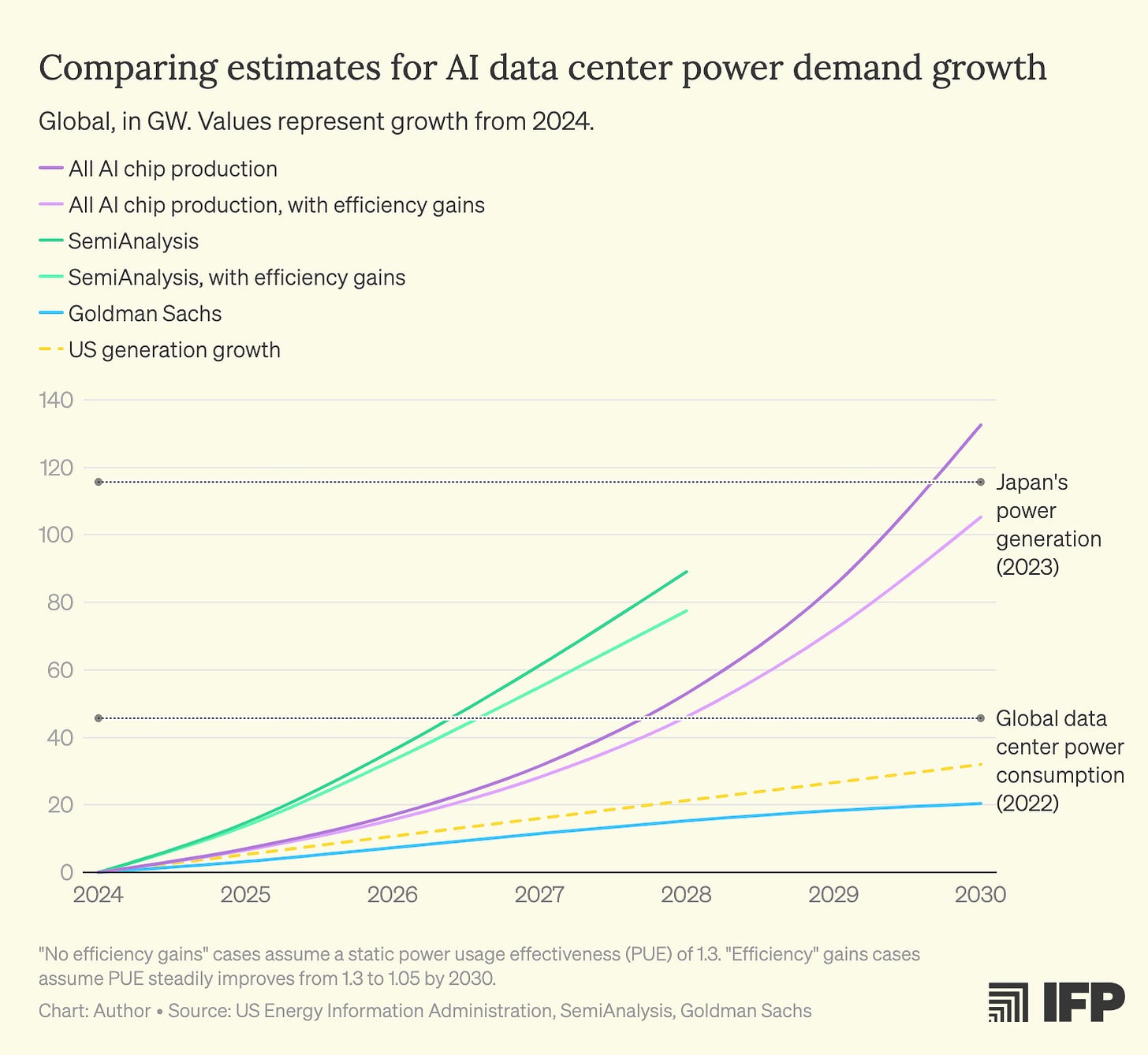

By 2028, frontier AI models will need data centers with at least 5 gigawatts of power capacity. That’s 16 to 25x the power drawn by today’s largest AI clusters.
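As a quick sanity check on that multiple, here is a back-of-envelope sketch of the cluster size it implies for today. The function name is made up for illustration, and the only inputs are the two figures quoted above.

```python
# Back-of-envelope: what size of cluster today is implied by "16 to 25x"?
TARGET_GW = 5.0        # from the text: capacity needed by 2028
MULTIPLES = (16, 25)   # from the text: range of the multiple

def implied_cluster_mw(target_gw: float, multiple: float) -> float:
    """Megawatts of a cluster that is `multiple` times smaller than the target."""
    return target_gw * 1000 / multiple

for m in MULTIPLES:
    print(f"{m}x -> {implied_cluster_mw(TARGET_GW, m):.1f} MW")
```

In other words, the claim puts today’s largest AI clusters at roughly 200 to 312.5 megawatts.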

But we’re already stretched thin. Today, AI data centers are operating at full throttle:

80% of their energy currently goes to training and 20% to inference. But as usage of AI products scales, that ratio is expected to flip.

And most critically: new capacity can’t come online fast enough.

Why?

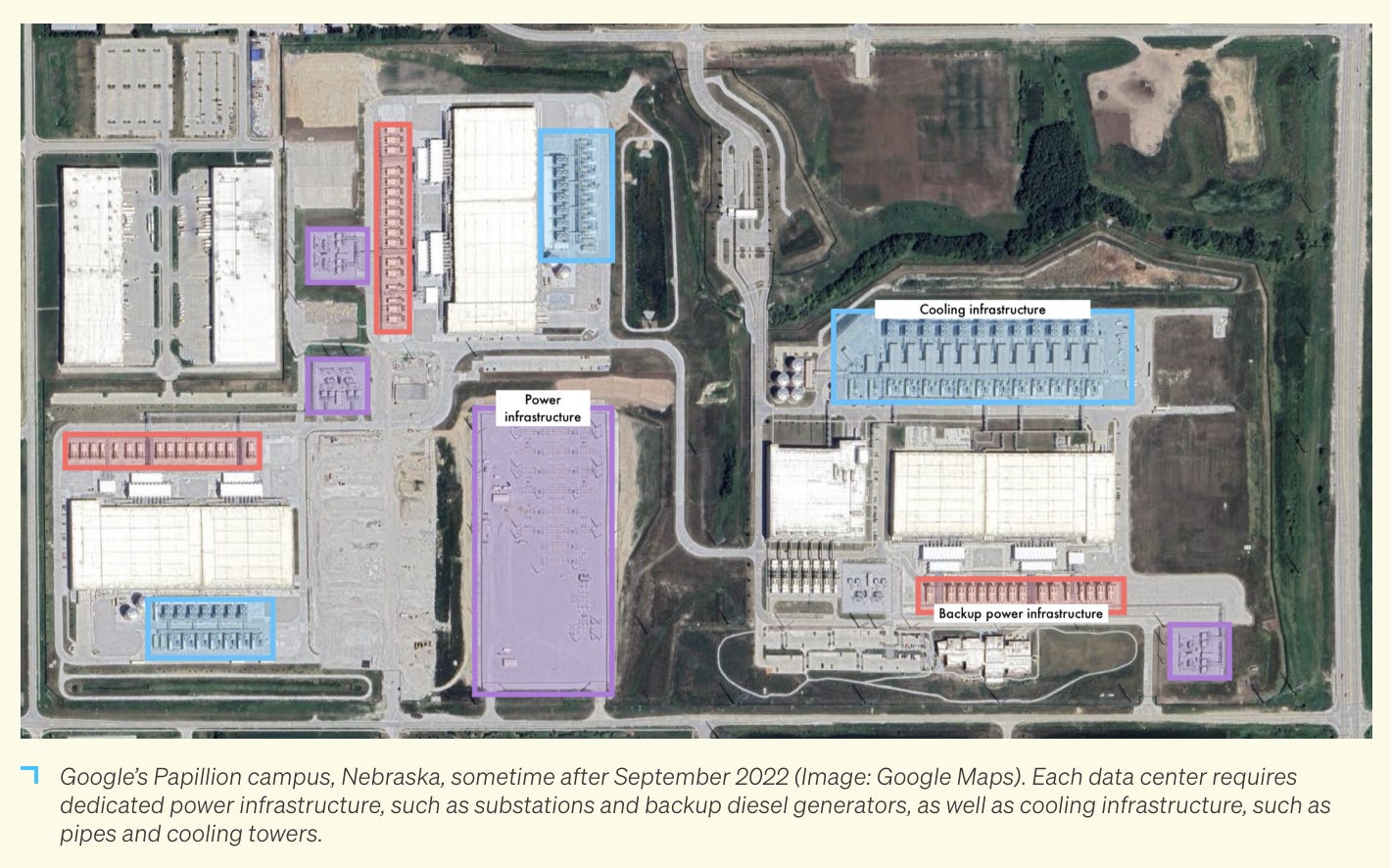

For the past 30+ years, the U.S. has been in a slow process of deindustrialization. Our power grid wasn’t built for constant, high-throughput baseload demand. It was designed for homes and offices (with peak-and-valley usage), not 24/7 AI clusters that demand a constant stream of high-voltage, always-on power. To give a sense of scale, a 100-megawatt data center needs roughly as much power as 75,000 homes.
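To make the homes comparison concrete, here is a rough sketch of the arithmetic behind it. It uses only the figures quoted in this piece (100 megawatts, 75,000 homes, and the 5-gigawatt target for 2028). The function names are hypothetical, and it assumes every home draws the same constant average load, which real homes do not.

```python
# Rough arithmetic behind "a 100 MW data center ~ 75,000 homes".
HOURS_PER_YEAR = 8760

def implied_home_kw(datacenter_mw: float, homes: int) -> float:
    """Average kilowatts per home implied by the comparison."""
    return datacenter_mw * 1000 / homes

def homes_for(capacity_mw: float, kw_per_home: float) -> int:
    """Number of homes whose combined average draw equals capacity_mw."""
    return round(capacity_mw * 1000 / kw_per_home)

kw = implied_home_kw(100, 75_000)   # figures from the text
print(f"Implied average draw per home: {kw:.2f} kW")
print(f"Implied annual use per home: {round(kw * HOURS_PER_YEAR)} kWh")
print(f"Homes equivalent to 5 GW: {homes_for(5000, kw)}")
```

By that math, the comparison assumes about 1.33 kilowatts per home, and a 5-gigawatt campus would draw as much as roughly 3.75 million homes.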

In addition, key grid components like transformers are now largely made overseas. Lead times can stretch for years.

Experts estimate it could take 3 to 10 years to bring new energy capacity and grid access online, because of regulatory red tape, aging infrastructure, and a lag in new generation projects.

Data is the new oil, but power is the pipeline. Will traditional utilities rise to meet this demand?

Amazon is working on powering data centers with nuclear energy

Microsoft signed a $10B deal with Brookfield for 10 GW of renewable energy capacity

It makes you wonder: Are we watching the birth of a new industrial class?

Have a good weekend,

Tara

We plan to release a deeper Strange Research Report on Energy and Compute in the next few weeks. Stay tuned!

Side note: FAANG has quietly become FAAMG in many circles, with Netflix dropped for sitting out the AI wave. I think this is such a missed opportunity, especially as video generation tech is accelerating fast. But that’s a deeper dive for another day.

Thank you HAUS for the great conversation on how we work with inventor-founders at Strange Ventures!

We talked about:

• Why design is a growth engine in deep tech

• How we help researchers bring inventions to market

• What makes a Strange Ventures founder

Full interview → Read on

The Download —

News that mattered this week

White House releases AI Action Plan, which outlines a path for the U.S. to lead in the AI race. The plan advocates deregulating AI and emphasizes a domestic stack for AI and compute. It also highlights a desire to cut bureaucracy and red tape around enterprise AI adoption and the buildout of infrastructure.

Google unveils AI that deciphers missing Latin words in ancient Roman inscriptions: Google’s DeepMind has unveiled a new artificial intelligence tool capable of deciphering and contextualising ancient texts, including Roman-era Latin inscriptions. The new AI, named Aeneas, could be a transformative tool that helps historians expand our understanding of the past, the tech giant said.

Meta researchers are developing a gesture-controlled wristband that can interact with a computer: Meta researchers are developing a wristband that lets people control a computer using hand gestures. This includes moving a cursor, opening apps, and sending messages by writing in the air as if using a pencil. Meta’s wristband employs a technique called surface electromyography (sEMG), which detects electrical signals generated by muscle activity to interpret user movements, as explained in a research paper published in the journal Nature.

xAI seeks $12bn in debt to fund Colossus 2 data center, with first chips online "in a few weeks": The Wall Street Journal reports that the generative AI business is working with investment firm Valor Equity Partners to secure the funds, just weeks after the company raised $10bn.

Eight months in, Swedish unicorn Lovable crosses the $100M ARR milestone: Less than a week after it became Europe’s latest unicorn, Swedish vibe coding startup Lovable is now also a centaur, a company with more than $100 million in annual recurring revenue (ARR). The startup claims it now has more than 2.3 million active users, and last reported 180,000 paying subscribers. The startup says more than 10 million projects have been created on Lovable to date.

Microsoft launched GitHub Spark, a new Copilot tool that lets users develop full-stack apps using natural language. Powered by Claude Sonnet 4, it offers one-click deployment, intelligent features, visual editing, and Copilot agents.

Higgsfield AI introduced Steal, a new tool that allows users to recreate any image from the internet with their own touch. It works with the Higgsfield browser extension, letting users hover over and instantly rework any image they see.

Double Click —

Links to reads we found interesting

OpenAI’s new exec has a grand plan to make AI for everyone (Gizmodo)

AI summaries cause ‘devastating’ drop in audiences, online news media told (The Guardian)

Concrete that lasts centuries and captures carbon? AI just made it possible (Science Daily)

China surges ahead in AI energy race as US faces infrastructure hurdles, says Anthropic (TechEDT)

Google DeepMind’s CEO Demis Hassabis talks about the future of AI & AGI, simulating biology & physics, video games, programming, video generation, world models, Gemini 3, scaling laws, compute and more (X)