The year of inference engineering?

A new MIT paper outlines an approach to beat context rot.

Happy new year! Welcome back to the Strange Review.

We’re kicking off the new year with a brand new section: Strange Signals, a daily visual feed of the data, patterns, and breakthroughs I'm tracking. After all, the future doesn’t happen all at once.

If you’d prefer to receive only the weekly deep dives, hit the unsubscribe link and uncheck “Strange Signals” to stop the daily (or every-other-day) posts.

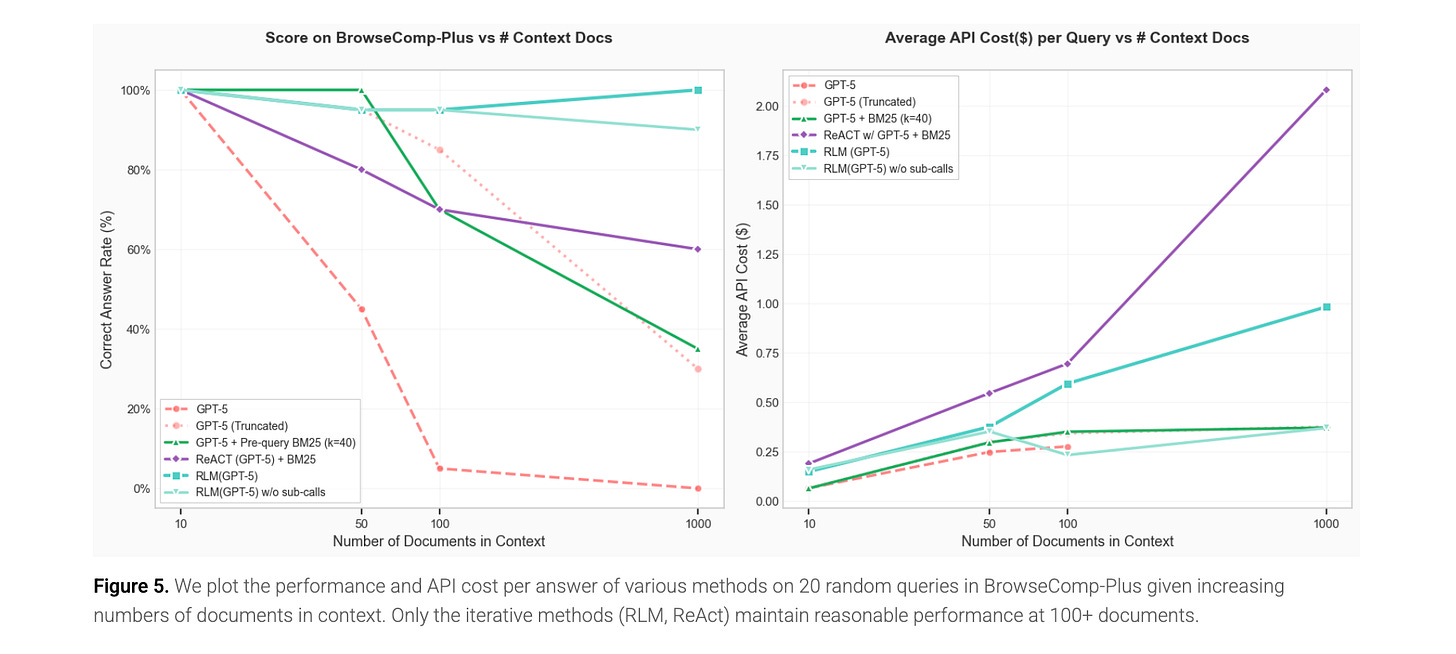

For years, the industry’s strategy for long context has come down to two limited options: ever-bigger context windows (to the point where models get confused, i.e. context rot) or chunking approaches like RAG (which can drop critical detail).

A new MIT paper proposes Recursive Language Models (RLMs), which treat the context window not as memory, but almost like an external database. The model writes its own Python code to search, filter, and self-assemble a data pipeline for every query on the fly.
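As a rough illustration of the idea, here is a minimal Python sketch. It is not the paper’s implementation. It assumes a REPL-style setup in which the long document lives in a variable named `context` and the model can make sub-calls through a function named `llm_query`. Both names are hypothetical, the “sub-model” is a toy regex stand-in, and `ROOT_MODEL_CODE` is a hard-coded program standing in for the code the root model would write for a given query.

```python
import re

# Hypothetical stand-in for a recursive sub-model call; a real RLM would query an LLM here.
def llm_query(prompt: str) -> str:
    # Toy behavior: return the first sentence in the prompt that mentions a 4-digit number.
    match = re.search(r"[^.]*\b\d{4}\b[^.]*\.", prompt)
    return match.group(0).strip() if match else "NOT FOUND"

# Hard-coded stand-in for the program the root model would write on the fly for one query.
ROOT_MODEL_CODE = '''
chunks = context.split("\\n\\n")                       # search: split the stored context
hits = [c for c in chunks if "merger" in c.lower()]   # filter: cheap keyword match in code
answer = [llm_query(c) for c in hits]                 # recurse: sub-calls on small pieces only
'''

def run_root_step(code: str, context: str) -> list[str]:
    # The long context sits in the execution namespace as a variable, not in any prompt.
    env = {"context": context, "llm_query": llm_query}
    exec(code, env)
    return env["answer"]

doc = (
    "Intro paragraph about the company.\n\n"
    "The merger with Beta Corp closed in 2019.\n\n"
    "Unrelated notes on hiring."
)
print(run_root_step(ROOT_MODEL_CODE, doc))  # ['The merger with Beta Corp closed in 2019.']
```

Only the one matching paragraph ever reaches a sub-model call. The rest of the document is searched and discarded by code. In a real system the root model would generate that program itself, inspect the result, and iterate.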

Early results are promising. On massive document tasks, RLMs hit 91% accuracy vs. 24% for native GPT-5. And they’re ~3x cheaper, too.

2026 will lean hard into inference engineering.

Source: Alex Zhang, Prime Intellect