The Intelligence Behind Creativity

The breakthrough in Gemini 3 wasn't just more compute. It was the data infrastructure. I don’t think we’ve fully grappled with what a step change this actually is.

We often define creativity as a break from structure. Random. Pure, unbridled intuition. But if you look at the history of breakthrough ideas, from Bach’s fugues to general relativity (or ask any creative professional about their internal process), you find the opposite. True creativity is grounded in logic and self-reflexivity, which becomes a springboard for original ideas.

For years, we treated AI like the opposite of that. A dream machine. An infinite improviser that could riff on anything but couldn’t hold a stable idea. We accepted “hallucination” as the price of admission for “creativity.”

Gemini 3 changes the definition. By folding the rigorous logic of AlphaProof (their mathematical reasoning system) into the chaotic intuition of an LLM, Google/DeepMind created a system where structure and originality reinforce each other. They didn’t make the model more creative despite logic. They made it more creative because of it.

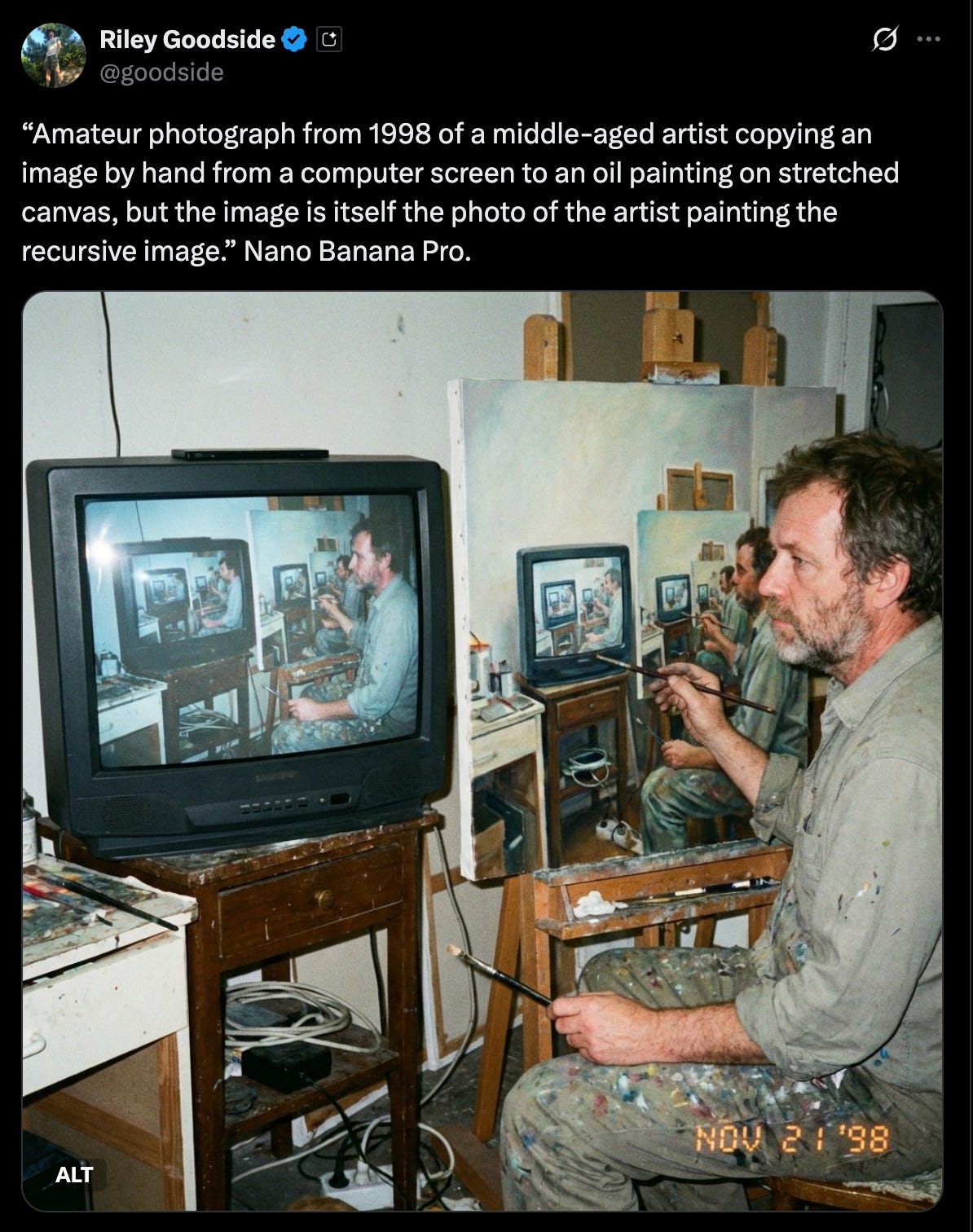

AI image generation has been a bit of a slot machine. You pulled the lever and hoped for the best. Nano Banana Pro brings visual reasoning to the table. It understands object permanence, character consistency, and, omg, legible text. (Credit: Riley Goodside)

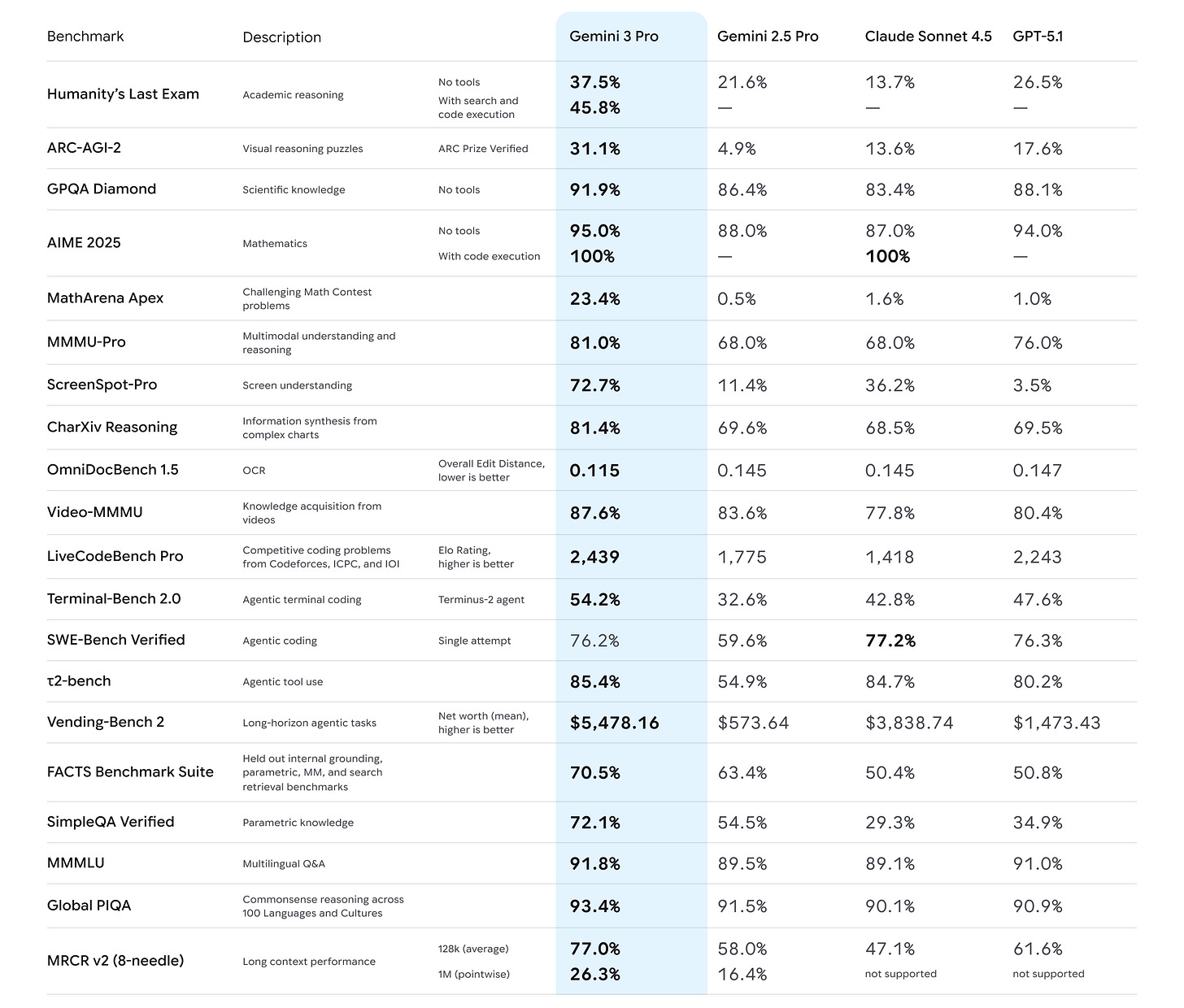

The results show it. Gemini topped every benchmark. But the real story isn’t an architectural breakthrough. It’s the way they trained the model: filtering, structuring, de-duping, and injecting synthetic reasoning data until the model internalized the thing creativity needs most, a spine.

Earlier this year, concerns were voiced that brute-forcing more data into bigger models had stopped producing real gains. The returns were flattening.

It turns out the “scaling wall” that everyone worried about wasn’t a dead end.

Google’s breakthrough didn’t just come from more data, but from better data. Gemini 3 is a triumph of their mastery of data infrastructure.

They rebuilt the entire pre-training pipeline: aggressive deduplication, curriculum shaping, filtration, multimodal alignment, the kind of painstaking data engineering that most of the industry hand-waves away. That foundation alone moved the frontier.
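To make "deduplication" and "filtration" concrete, here is a minimal sketch of what those two steps can look like on a toy corpus. It is an illustration only, not Google's pipeline, which isn't public in this detail. The function names (`normalize`, `shingles`, `clean_corpus`) and the thresholds are hypothetical, and production systems typically use scalable techniques such as MinHash with locality-sensitive hashing rather than the pairwise comparison used here.

```python
from __future__ import annotations

import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def shingles(text: str, n: int = 3) -> set[str]:
    """Return the set of overlapping n-word windows in the normalized text."""
    words = normalize(text).split()
    if len(words) < n:
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def clean_corpus(docs, min_words=5, near_dup_threshold=0.8):
    """Filter short documents, then drop exact and near duplicates.

    min_words and near_dup_threshold are arbitrary illustrative values.
    """
    seen_hashes = set()
    kept = []
    kept_shingles = []
    for doc in docs:
        norm = normalize(doc)
        # Filtration: a crude quality filter based on length alone.
        if len(norm.split()) < min_words:
            continue
        # Exact deduplication: hash of the normalized text.
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue
        # Near deduplication: compare shingle sets against everything kept so far.
        sh = shingles(doc)
        if any(jaccard(sh, other) >= near_dup_threshold for other in kept_shingles):
            continue
        seen_hashes.add(digest)
        kept.append(doc)
        kept_shingles.append(sh)
    return kept
```

Calling `clean_corpus` on a list of strings returns the survivors in their original order. The pairwise comparison is quadratic in the number of kept documents, which is fine for a demo and is exactly the part a real pipeline would replace.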

And this loop is about to accelerate.

Not because of more compute, but because of Google’s distribution. When 3 billion Workspace and Gmail users accept a grammar suggestion in Docs, ask Gemini to create calendar events, or fix a line of code in Google AI Studio, they’re providing a high-fidelity reinforcement signal. They reveal intent. Preference. What “correct” actually feels like. (Note: they don’t train on user data, e.g., what’s in your email, but on user feedback to AI suggestions.)

This closes the loop between what the AI imagines and what humans validate. It turns Gemini into a system that doesn’t just model syntax or logic. It learns what people actually want.

With that feedback architecture, it’s hard to imagine Gemini not compounding into the most capable model on the planet.

And if that is true, then the most powerful AI model in the world will not be built on Nvidia GPUs.

I wrote a few months ago about why I think Nvidia made a surprising $100 billion investment into OpenAI.

In reality, it makes sense if you understand what they are really buying: a hedge against a future where Gemini on TPUs makes Nvidia obsolete.

Why OpenAI has to go full-stack and why Nvidia has to bet big on OpenAI (Sep 25, 2025)

Unlike Nvidia, Google doesn’t seem interested in selling TPUs. Likely, they will remain rental-only cloud infrastructure. But something more interesting is happening: they’re quietly building a developer ecosystem around JAX, an open-source numerical computing library built on the XLA compiler that runs the same code on both GPUs and TPUs.

Alongside the Gemini 3 release this week, with little fanfare, they dropped a technical blog post inviting developers deeper into the JAX AI stack. This isn’t just about hardware access - it’s about making their entire computational approach portable.

I predict that Google will double and triple down on building out their developer and builder ecosystem across the stack in the next 12 months, from applications that leverage Gemini to agentic platforms built on Antigravity (their new IDE) to TPUs.

Mind the Product Gap

My one criticism is that Google’s products are still not as world-class as their models. They have the end-to-end ecosystem across the sprawling company, but the last mile from model to product is fragmented.

A small example: I rebuilt the Strange website with Gemini in AI Studio. It was beautiful. Ironically, it took longer to deploy it live on a domain than to build it (just over an hour from first prompt to live on web). Google Cloud Run and the broader developer console feel like a maze compared to the brutal simplicity of Vercel. The hosting UX needs that same level of taste and restraint that’s gone into the models.

The Gemini surface area is equally confusing. There are too many doors into the same house: AI Studio, Flow, Whisk, Gemini inside Workspace, Vertex, and more coming. It feels like five hundred different teams shipping five different on-ramps to the same core capability. If Google can design that into a coherent whole, they’ll go from powerful to unstoppable.

This is a step change

I don’t think we’ve fully grappled with what a step change Gemini 3 actually is.

Most people won’t experience it as “state-of-the-art reasoning benchmarks.” They’ll meet it in tiny, domestic ways: chatting with Gemini to schedule their week, asking it to summarize a chaotic inbox, auto-drafting documents, generating lesson plans, debugging code, planning trips, or negotiating a calendar full of kids’ activities and work calls.

When Gemini’s reasoning shows up in Search, the mental model of “looking things up on the internet” changes. Instead of paging through blue links, you’re effectively talking to a system that has learned both the world and your intent.

On the creative side, the shift is even more profound. Gemini Nano Banana Pro doesn’t just compete with Canva and Photoshop. This is much, much bigger than that. It has fundamentally leapfrogged the act of creating and communicating.

One of my favorite emerging workflows for animation. Credit: Henry Daubrez

The creative industry will reorganize around this.

So will education. So will knowledge work. So will solo builders.

Gemini 3 is the first glimpse of a world where reasoning and imagination are ambient infrastructure, available by default to anyone with access to the Internet.

The walls are down.

Start building.

Thanks Tara, great article! I have found nano banana to be a game changer, especially in terms of subtle edits. However, I still find I get the best results when I first develop an optimised prompt in ChatGPT. That might be because I am still more familiar with that tool though...

The AlphaProof example really nails it. Combining rigorous logic with intuitive exploration creates something more than the sum of its parts. It’s not about adding compute, it’s about architecting the feedback loops between structure and creativity properly.