The Cognitive Flip: AI is officially more collaborator than tool

New data suggests ChatGPT's 700M users would rather have AI think with them than just do things for them.

OpenAI just published a report on how people use ChatGPT, and the data confirms something I've been feeling: we've fundamentally changed what we want from AI.

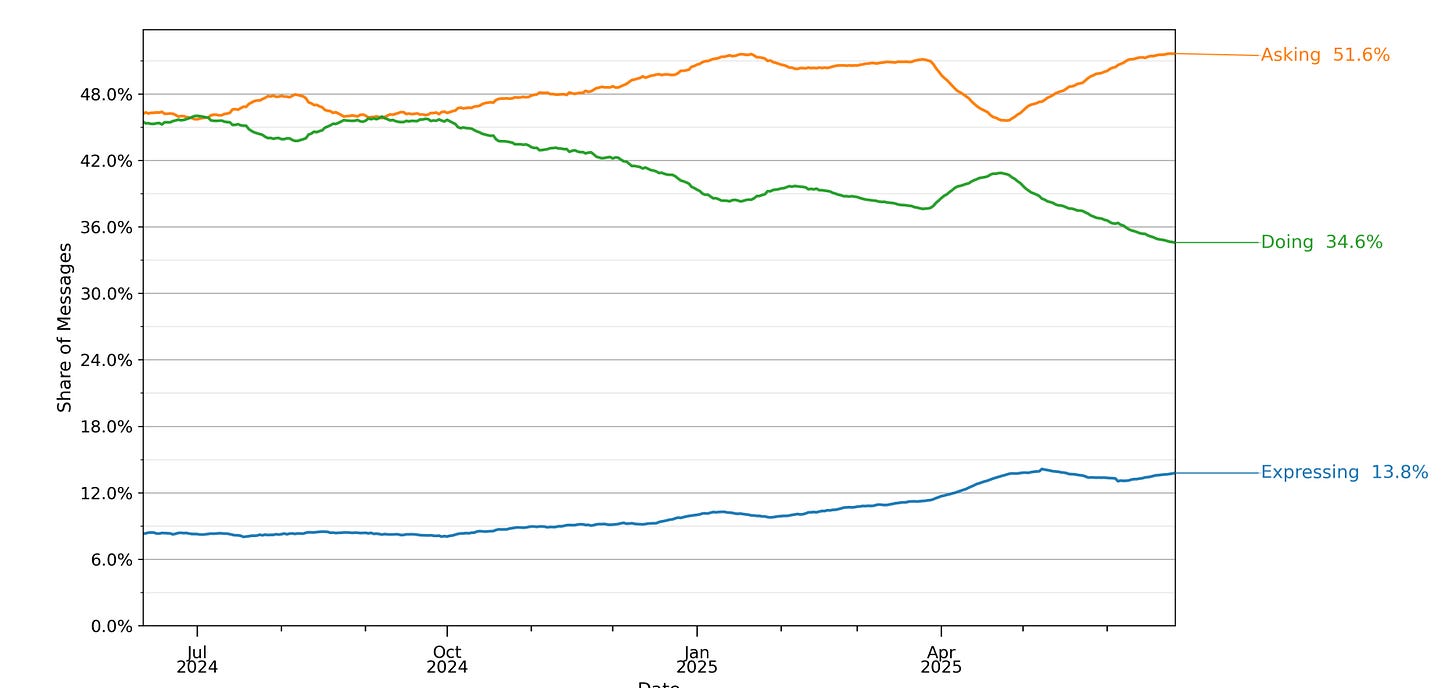

The paper classifies ChatGPT messages into three intents: Asking (seeking info or advice), Doing (task completion), and Expressing (just... talking).

Here’s what struck me.

In July 2024, usage was evenly split:

46% Asking

47% Doing.

By June 2025, something shifted:

52% Asking

only 35% Doing.

In less than a year, we’ve shifted to asking more than doing. That's backwards from what everyone predicted.
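To make the size of that shift concrete, here is a quick back-of-the-envelope sketch using only the four percentages quoted above. The variable and function names are mine, and treating whatever is left over after Asking and Doing as "everything else" (Expressing plus anything unclassified) is my assumption, not a figure from the report.

```python
# Shares of ChatGPT messages by intent, as quoted in this post (percent).
shares = {
    "July 2024": {"asking": 46, "doing": 47},
    "June 2025": {"asking": 52, "doing": 35},
}


def remainder(month: str) -> int:
    """Share not accounted for by Asking or Doing (assumed: Expressing + other)."""
    s = shares[month]
    return 100 - s["asking"] - s["doing"]


def change(intent: str) -> int:
    """Change in percentage points from July 2024 to June 2025."""
    return shares["June 2025"][intent] - shares["July 2024"][intent]


asking_change = change("asking")
doing_change = change("doing")
gap_before = shares["July 2024"]["asking"] - shares["July 2024"]["doing"]
gap_after = shares["June 2025"]["asking"] - shares["June 2025"]["doing"]

print(f"Asking: {asking_change:+d} points, Doing: {doing_change:+d} points")
print(f"Asking minus Doing: {gap_before:+d} -> {gap_after:+d} points")
print(f"Everything else: {remainder('July 2024')}% -> {remainder('June 2025')}%")
```

On these numbers, Doing fell twice as many points as Asking gained, so part of the lost Doing share appears to have gone to the remaining category rather than to Asking.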

The average ChatGPT conversation isn't “write me an email” anymore (doing). It's “what should I think about this?” (asking). OpenAI's data proves we've crossed a cognitive line we might never uncross.

AI isn’t replacing workers by automating tasks. It’s augmenting them in a subtler way: by absorbing the cognitive overhead of decision-making. When knowledge workers become more productive, it's not because they're cranking out more widgets. It's because they're making better decisions faster. AI has become decision support infrastructure.

But there's something unsettling here. When half our interactions are asking AI for guidance, are we outsourcing not just tasks, but taste? When we ask “make this better” or “compare this to that”, whose benchmarks are we measuring against?

In 2.5 years, ChatGPT has onboarded 700 million users. That’s nearly 10% of humanity developing a new cognitive habit. We're becoming intellectually symbiotic with these models, and it’s rewiring our brains faster than we think.

When I saw this data, I had to do the math on my own ChatGPT usage. I’ve had over 3,800 conversations over 2.3 years. That's about 4.5 conversations every single day, or roughly 75 minutes of AI collaboration daily, and that's not counting Claude, Cursor, or any of the AI baked into my other tools. So let’s call it 90 to 100 minutes a day of actively choosing to think with, not through, an AI.
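If you want to run the same check on your own history, the arithmetic is short. This is a minimal sketch using the figures above; the variable names are mine, and the 75 minutes a day is taken as given, since the per-conversation length below is only what that figure implies, not something measured separately.

```python
DAYS_PER_YEAR = 365.25

conversations = 3800   # total conversations, from the post
years = 2.3            # time span, from the post
minutes_per_day = 75   # daily estimate, from the post (taken as given)

days = years * DAYS_PER_YEAR
conversations_per_day = conversations / days

# Implied average length of one conversation if the daily estimate holds.
minutes_per_conversation = minutes_per_day / conversations_per_day

print(f"{conversations_per_day:.1f} conversations per day")
print(f"{minutes_per_conversation:.1f} minutes per conversation (implied)")
```

Swap in your own conversation count and time span to see where you land.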

I talk to ChatGPT more than I talk to most of my colleagues.

Maybe the real question isn't what happens when AI can do our jobs. It's what happens when we can't imagine doing our jobs without asking AI what it thinks first. Or what we should do next.

What happens when the model becomes opinionated? When it stops being a mirror and starts being an advisor?

We're about to find out.