How I Built a Live Website in 2 Hours (and Skipped Figma)

In other news: Moar big funding rounds in AI. Tesla shows off more mockups of its Cybercab. Crowd seems unfazed.

Today, I decided to challenge myself: Could I go from an idea to a live website in just two hours? Spoiler alert: I did it, and here’s how.

I used:

Claude to “brainstorm” and generate initial code

Cursor AI to fix bugs, clean up the code, and make git commits

Vercel to deploy and connect to a domain

The process was surprisingly simple, and I skipped Figma (sorry, friends!) entirely. I did most of the prompting in natural language, going back and forth with the models to discuss and discard prototypes.

My initial prompt was “Code a personal website for… Keep the site minimalistic and modern, but beautifully designed, text-forward.”

The first iteration looked like a WordPress website from 2007!

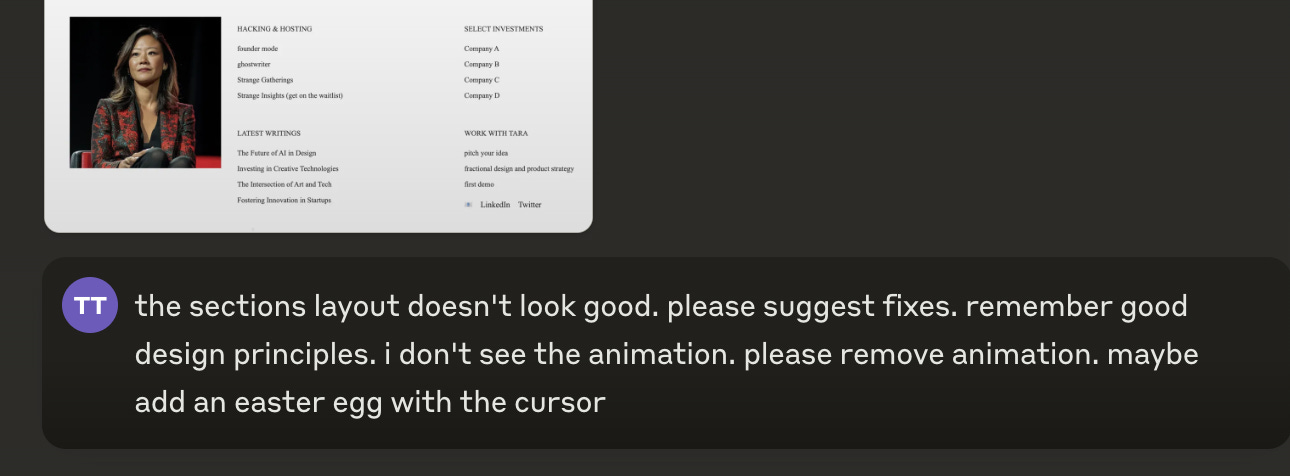

Not quite what I wanted. I then pulled up a few reference images and used more open-ended prompts to shape the direction of the design. Instead of micromanaging every visual detail, I leaned into abstract prompts like “add Easter egg design elements” (it created a tiny rocket emoji 🚀 on my cursor) or “animate it so the ripples look natural like water”. We experimented with several layouts on the fly; Claude’s canvas for previewing rendered code is so nifty.

This is the final result: tara-tan.com

It’s pretty simple vanilla HTML, but it was exactly what I needed to revive my dormant personal website.

The session made me wonder about the second-order effects of these tools.

We need better infrastructure: While tools like Vercel and Supabase (I’m such a fan!) are great, we still need more user-friendly options for managing databases and deployments. The barrier to entry is lower than ever, but it could be even lower.

UI/UX creation tools need to evolve: As AI gets better at translating natural language into design and code, our tools need to keep up. Imagine a design tool that could understand and implement abstract concepts as easily as Claude did, or that could tweak designs on the fly through voice.

The styling process is still a pain: Even with Tailwind, getting things to look just right is time-consuming. We need better ways to translate design intent into code.

All said and done, this exercise reinforced my thesis that the idea-to-deployment market is going to grow at hyperspeed. There are a ton of design opportunities on either end of the process: i) the ideation and prototyping tools and ii) the deployment infrastructure.

Have a great weekend and happy hacking!

P.S. This week I’m in deep research mode on two more topics at the intersection of UX and AI (one on prompt coaxing, and another on the relationship between user research and feature engineering), and I’m excited to share more soon.

The Latest

New high-quality AI video generator Pyramid Flow launches – and it’s fully open source: Pyramid Flow generates high-quality video clips up to 10 seconds long, quickly and fully open source. It uses a new technique in which a single AI model generates video in stages, most of them at low resolution, saving the full-resolution version for the end of the generation process.

Gradio 5 is here – Hugging Face’s newest tool simplifies building AI-powered web apps: Hugging Face has released a new major version of Gradio, its Python package that lets developers create AI-powered web apps with just a few lines of code.

Black Forest Labs launched Flux 1.1 Pro, an enhanced text-to-image AI model that it says is 6x faster than its predecessor and outperforms competitors like Midjourney and DALL-E.

Elon Musk reveals Tesla’s autonomous taxi, the Cybercab. But folks are saying it’s all for show.

Several big-ticket raises this week, with legal AI startup EvenUp hitting unicorn status, and former Salesforce co-CEO Bret Taylor’s latest AI agent company rumored to be valued at $4B.