Google's Opal Changes the Game for Agents and Automation

xAI teases a video generation model, China releases more open source frontier models

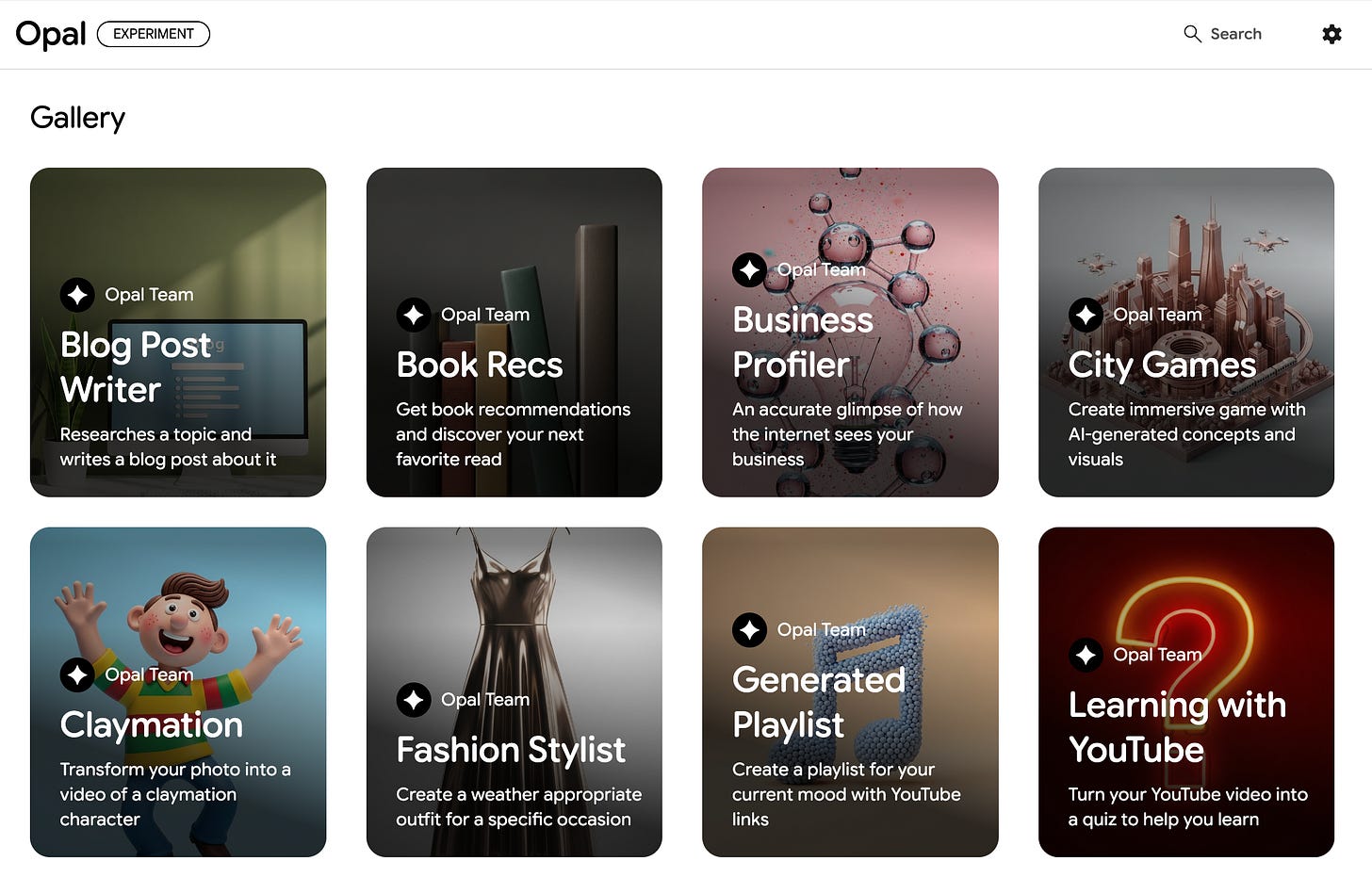

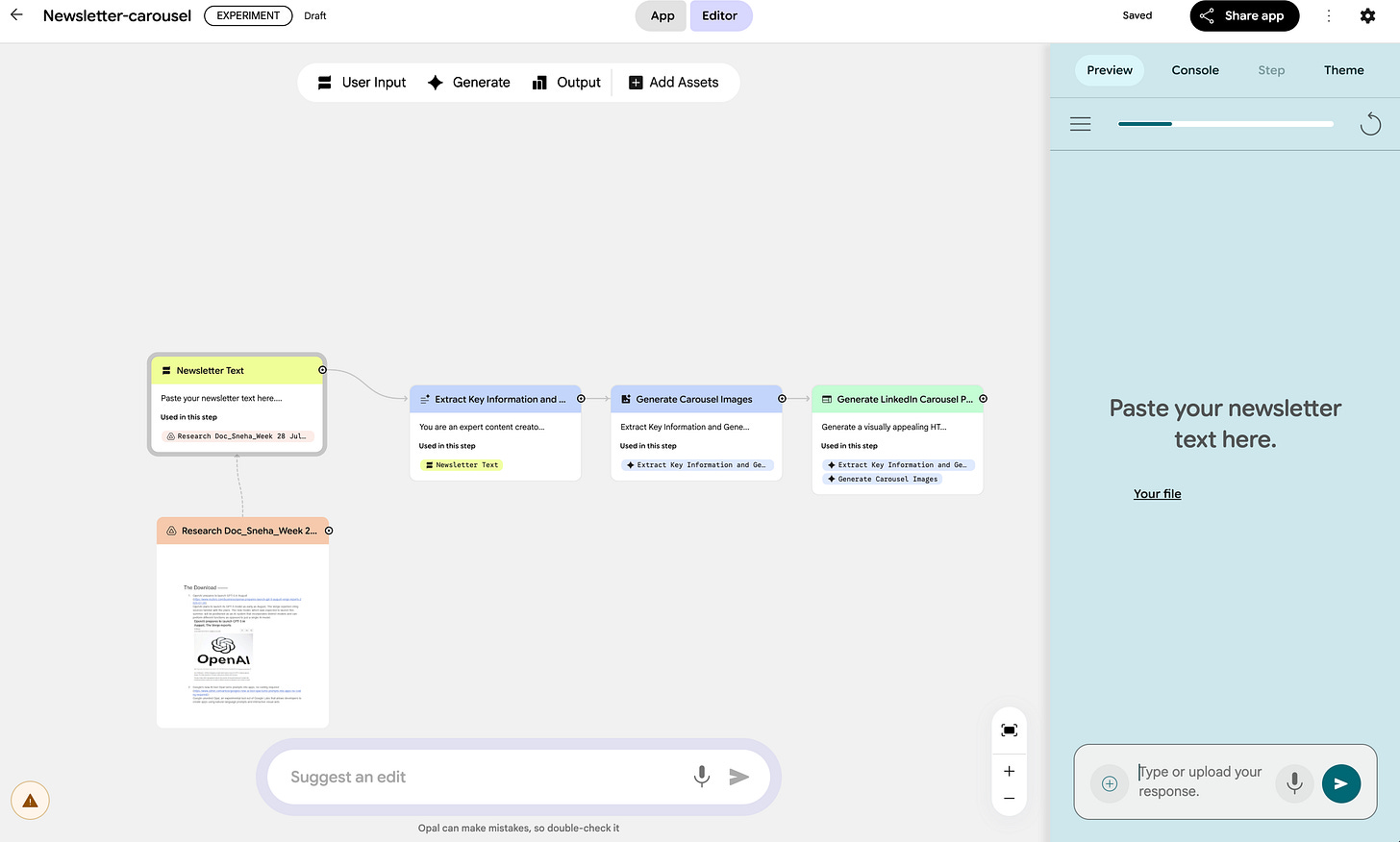

Google’s new mini-app builder, Opal, hints at a native, agentic operating system.

Opal lets you describe what you want in plain English, and then instantly builds an agentic workflow to make a mini app that can do anything.

Like:

Turn a YouTube video into a quiz

Turn a memo into Google Slides

Create a playlist based on your mood

No finicky API webhooks, scripting, or nodes. Just language + logic + agents.

This is Google’s quiet play for the agentic OS, and it’s gunning straight for:

Zapier, the legacy no-code automation layer

n8n, Make, and Pipedream, the developer workflow orchestrators

Genspark, Manus, and the new wave of AI-native builders

You can even use Google’s latest reasoning models to plan, develop, and decipher, but the real story here is how deeply it’s plugged into the Google universe.

Currently, you can integrate Opal with YouTube and Google Workspace apps like Drive, Docs, and Slides. But you can imagine what’s next: Gmail, Calendar, Search, maybe even Wallet.

This is a big deal. Most agentic tools today, such as Manus, Genspark, n8n, and Zapier, still feel like duct tape. Opal could be the first one that feels native.

And then there’s the scale. It’s hard to pin down just how much of the internet runs on Alphabet products, but here’s a quick snapshot:

3 billion Workspace users

35% of global streaming via YouTube

90%+ of search volume via Google

This is agentic intelligence with data and distribution from day one.

Opal could be Google’s quietest moonshot.

If it matures into a true cross-app agent that can reason, observe, act, and build mini apps across the Google universe, Opal might not just replace low-code automation tools.

It could reshape how we use Google itself.

The Download —

Microsoft launches AI-based Copilot Mode in Edge browser (Reuters)

Microsoft launched a new "Copilot Mode" on its Edge browser that uses artificial intelligence to improve the browsing experience. Copilot Mode can help carry out tasks, organize browsing into topic-based queries and compare results across all open tabs without requiring users to switch between them, Microsoft said.

SensorLM: Learning the language of wearable sensors (Google Research)

Google introduced SensorLM, a new family of sensor–language foundation models trained on 60 million hours of data, connecting multimodal wearable sensor signals to natural language for a deeper understanding of health and activities.

Chinese startup Z.ai launches GLM-4.5 and 4.5 Air, two open-source agentic models (CNBC)

The 4.5 variant, with 355B parameters, tops open models worldwide and ranks just behind o3 and Grok 4. It also excels at agentic tasks, with a 90% success rate in tool use.

xAI is working on a video generation model called 'Imagine' (CNBC)

It is expected to come integrated with Grok with the ability to produce videos with native audio – a feature so far only seen in Google's Veo 3.

Alibaba’s upgraded Qwen3 235B Thinking 2507 is now the leading open weights model, beating DeepSeek R1 (Artificial Analysis)

Qwen3 235B 2507 (Reasoning) has jumped from the original Qwen3’s score of 62 to 69 in the Artificial Analysis Intelligence Index. This positions Qwen3 235B 2507 (Reasoning) one point above DeepSeek R1 0528.