Beyond Voice... into Multimodal Experiences.

Also: Google introduces a "thinking budget", Manus picks up $75M from Benchmark, and some fun reads for the weekend.

Happy Friday!

Back in Sep 2023, we forecasted that voice interfaces would become a key way we interact with AI (hat-tip to co-author and Strange Research Fellow Daniella Levitan).

In the 18 months since then, venture funding in voice-centric AI startups has skyrocketed. ElevenLabs, the New York-based generative speech company, was a first mover with its uncanny text-to-speech voices. Within two years, it closed a $180M Series C at a valuation north of $3B. Revenues are on track to hit an explosive $100M annually, an estimated 260% year-over-year increase.

A parade of Voice AI startups has attracted sizable rounds in the past year. San Francisco-based Vapi, which provides a platform for businesses to build custom voice agents, raised $20M in a Series A in December 2024 led by Bessemer Venture Partners. That round valued the one-year-old company at $130M, underscoring investor belief in the market.

London’s PolyAI, focused on enterprise voice assistants for call centers, secured $50M (Series C) in May 2024 at nearly a $500M valuation, with NVIDIA among its backers. Meanwhile, the Y Combinator Winter ’24 batch featured a bumper crop of voice AI startups (Olivia Moore of a16z noted “90 voice agent companies” in YC by late 2024). This influx of capital has funded everything from core speech synthesis engines to “AI agents” tackling sales calls, therapy sessions, and beyond.

HappyRobot exemplifies the wave of vertical-specific voice AI companies. The company is laser-focused on supply chain and logistics communications – essentially, AI “workers” that automate the back-and-forth calls and updates needed to keep supply chains humming. Its platform integrates with warehouse management systems, ERPs, and other enterprise tools so it can make calls or send messages to schedule shipments, update customers, and handle exceptions – all in a human-like voice.

The dollars hint at the market’s growing conviction: voice AI has evolved from clunky demos to business-critical tech.

Notably, Barclays projects the overall AI agent market (voice and text) could reach $110B by 2028, highlighting the massive runway ahead.

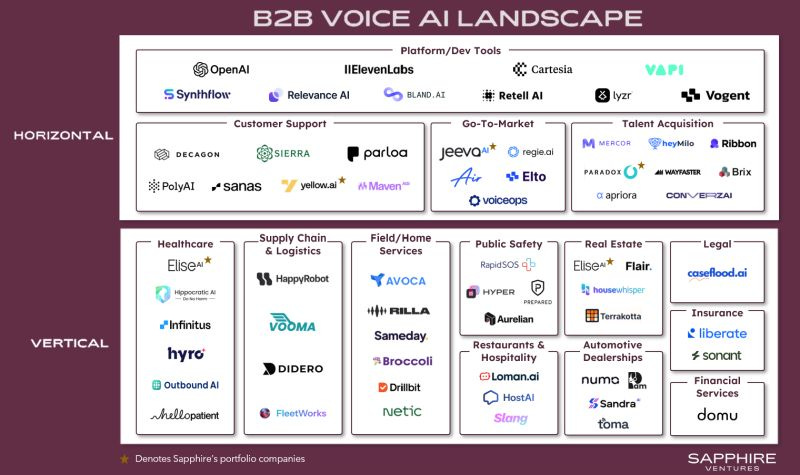

Sapphire Ventures recently released a “B2B Voice AI Landscape” market map highlighting dozens of startups, from horizontal platforms to vertical applications.

Investors are also betting that voice will become a standard interface for many software products, in the way graphical UIs or mobile apps did in prior eras.

Bessemer’s team points out that humans make “tens of billions of phone calls each day” and businesses still depend on calls to communicate complex or high-stakes information. If even a fraction of those interactions are enabled or enhanced by AI (say, AI voice assistants handling overflow calls, or AI note-takers extracting insights from sales calls), that’s a multi-billion-dollar software market.

But is it moot? The Rise of Open-Source and Diverse Voice AI

Countering the capital raised by these hot Voice AI companies is a plethora of open-source (read: free) projects. Sesame AI open-sourced its core voice model, CSM-1B, which can generate remarkably human-like speech (with nuances like tone, pacing, and emotion) directly from text or even from other audio.

A two-person startup by the name of Nari Labs has introduced Dia, an open model designed to produce naturalistic dialogue directly from text prompts. It supports nuanced features like emotional tone, speaker tagging, and nonverbal audio cues.

Rime AI takes an interesting approach: it trains its models on diverse voices, which makes them sound much more natural.

Challenges and Limitations of Voice AI Today

Voice AI, for all its progress, still faces significant challenges and limitations.

Getting to Human-Level Quality – Consistently: The demo of a voice AI agent handling a call perfectly is impressive; doing it consistently at scale is hard. Achieving a truly reliable voice agent means mastering a complex stack, and each layer can introduce errors. For instance, mishearing a word (speech recognition) can throw off the AI’s reply. Early voice bots often failed at this, leading to the dreaded “I’m sorry, I didn’t get that” loop.

Latency is another quality issue: if the AI takes too long to respond, the illusion breaks. Keeping response time under about one second (close to natural human conversation pace) is a real engineering challenge.
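To make the one-second target concrete, here is a minimal sketch of a latency budget. The stage names and millisecond figures are illustrative assumptions, not measurements from any product mentioned here; the point is only that the stages add up quickly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float  # assumed, illustrative figure (not a measurement)


def total_latency_ms(stages):
    """Sum the per-stage latencies of one conversational turn."""
    return sum(stage.latency_ms for stage in stages)


def over_budget(stages, budget_ms=1000.0):
    """True if the turn takes longer than the budget (default: one second)."""
    return total_latency_ms(stages) > budget_ms


def slowest_stage(stages):
    """Return the stage that contributes the most latency."""
    return max(stages, key=lambda stage: stage.latency_ms)


# Hypothetical pipeline for a single turn of a voice agent.
PIPELINE = [
    Stage("speech recognition", 250.0),
    Stage("language model reply", 450.0),
    Stage("speech synthesis", 200.0),
    Stage("network round trips", 150.0),
]

if __name__ == "__main__":
    print(f"total: {total_latency_ms(PIPELINE):.0f} ms")
    print(f"over the one-second budget: {over_budget(PIPELINE)}")
    print(f"slowest stage: {slowest_stage(PIPELINE).name}")
```

With these assumed numbers, no single stage looks slow on its own, yet the turn still lands past one second. That is why teams tend to look at the whole pipeline rather than tuning one component in isolation.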

Emotional Intelligence (The “Voice Presence” Problem): Humans convey subtle emotions and emphases when they speak; replicating that is tough. Until that capability matures, some voice interactions will feel slightly off, skating near the uncanny valley of dialogue.

Integration and Context Understanding: A voice agent doesn’t live in a vacuum – in business settings it needs to tie into databases, calendars, transaction systems, etc., to be useful. Building these integrations for an AI agent is non-trivial. These agentic systems must retrieve info and make decisions fast enough to keep the conversation flowing. There’s also the challenge of memory: humans remember context from earlier in a call, and customers expect the AI to as well.

In 2025, we’re at the “awkward teenager” stage of Voice AI – clearly full of potential, already surprisingly capable in some areas, but also prone to the occasional crack in the voice.

Beyond Voice

Today, we’re seeing a similar trend in the nascent stages of breaking out: multimodal, responsive interactions. Rather than just voice, future interfaces will be able not only to talk, but also to see, listen, and understand context simultaneously.

Major model makers like Google, OpenAI, and Meta have started releasing foundation models that can reason across multiple modalities. As investors and builders, we’re on the lookout for the next wave of founders who can harness this new capability and turn it into an experience.

OpenAI makes gpt-image-1 available to developers via API: The image generator, which launched for most ChatGPT users in late March, went viral for its ability to create realistic Ghibli-style photos and “AI action figures.” A natively multimodal model, gpt-image-1 can create images across different styles, follow custom guidelines, and render text.

Anthropic analyzed 700,000 Claude conversations and found that its models align with company values: The study examined 700,000 anonymized conversations, finding that Claude largely upholds the company’s “helpful, honest, harmless” framework while adapting its values to different contexts, from relationship advice to historical analysis. This represents one of the most ambitious attempts to empirically evaluate whether an AI system’s behavior in the wild matches its intended design.

A new, open source text-to-speech model called Dia has arrived to challenge ElevenLabs, OpenAI and more: A two-person startup by the name of Nari Labs has introduced Dia, a 1.6 billion parameter text-to-speech (TTS) model designed to produce naturalistic dialogue directly from text prompts. Dia supports nuanced features like emotional tone, speaker tagging, and nonverbal audio cues – all from plain text.

Google introduces “a thinking budget” in Gemini 2.5 Flash: This control mechanism allows developers to limit how much processing power the system expends on problem-solving. This “thinking budget” feature responds to a growing industry challenge: advanced AI models frequently overanalyse straightforward queries, consuming unnecessary computational resources.

OpenAI’s image generation model is now in Higgsfield. Now users can animate images with cinematic camera motion in seconds.

Lovable 2.0 introduces multiplayer vibe coding with workspaces: teams of up to 2 or 20 people (depending on your subscription tier) can collaborate on projects.

ElevenLabs’ new Agent-to-Agent feature enables handoffs between conversational AI agents in a workflow.

Manus AI, the general agent platform, picks up $75M from Benchmark.

Anthropic’s Dario Amodei writes an op-ed on The Urgency of Interpretability (Link)

Welcome to the Era of Experience from Google’s David Silver (Link)

DeepMind’s Demis Hassabis says that today's AI lacks consciousness, but self-awareness could emerge "implicitly." (Link)

Andrej Karpathy talks about his rhythms for vibe coding (Link)

Netflix Blog: A Foundational Model for Personalized Recommendations (Link)

Thailand introduced the first AI Police Cyborg. Welp, what could go wrong? (Link)

Why do AI company logos look like buttholes? (Link)