An Image Paints a Thousand Words

Can Vision Tokens Solve AI's Memory Problem? Digging into the latest paper from Deepseek.

When you speed reading, you don’t vocalize every word. You chunk information, processing phrases and sentences as units rather than individual letters. Your brain compresses “the quick brown fox” into one semantic unit instead of sixteen characters.

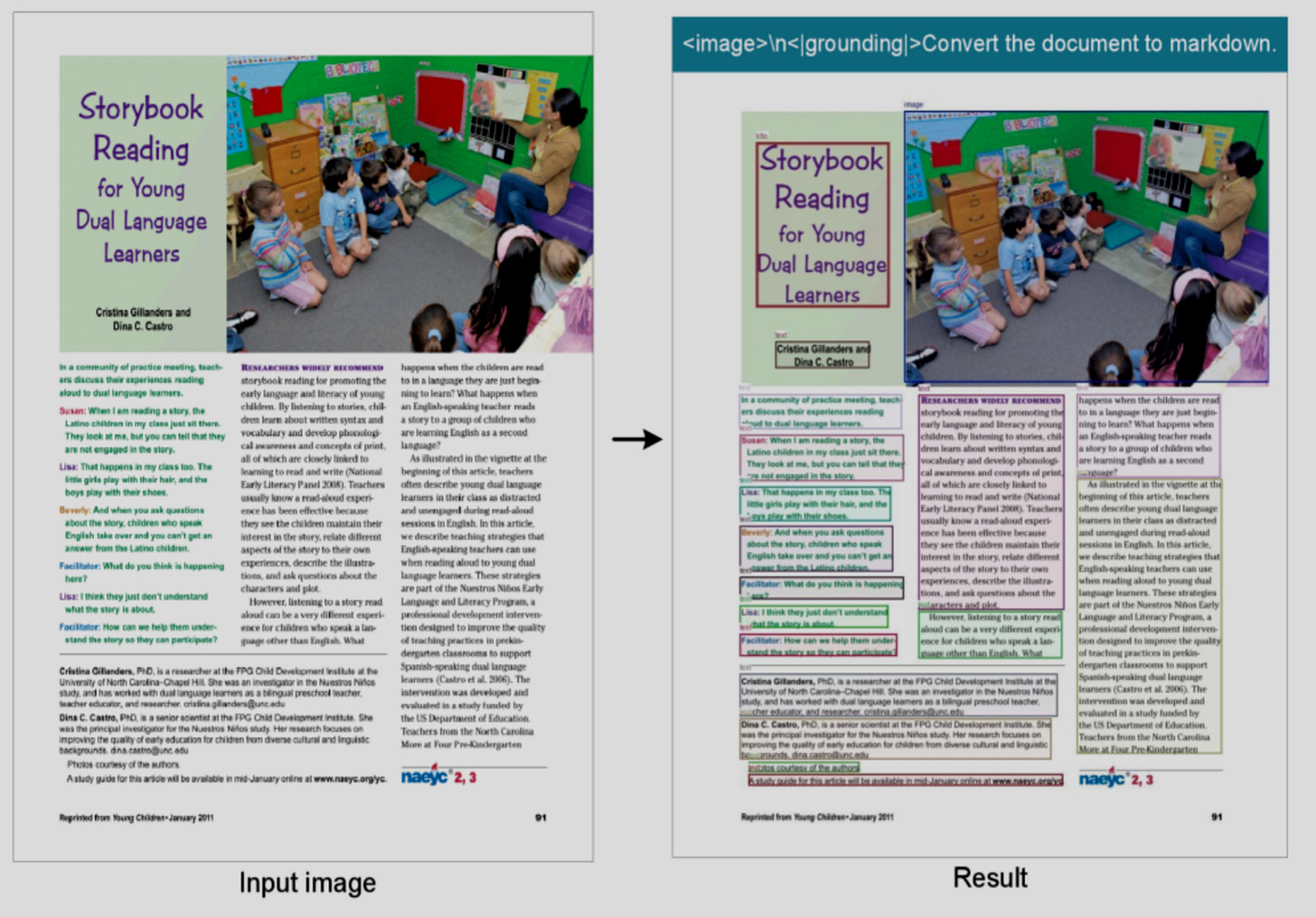

DeepSeek’s new paper proposes something similar for language models: compress a document page (normally 2,500 text tokens) into 256 visual tokens that preserve both content and spatial structure. This could solve the quadratic scaling problem. Transformer attention costs scale with the square of sequence length, so cutting tokens 10× reduces compute 100×.

I wonder if the Chinese AI lab’s approach stems from something fundamental about how Mandarin encodes information (time to brush up on my rusty Mandarin skills!). Consider the phrase 卧虎藏龙 (wò hǔ cáng lóng) or ”crouching tiger, hidden dragon.” Four characters, 49 brush strokes, packed with metaphorical imagery.

The English equivalent needs roughly 40 words: “This contains extraordinary people with exceptional abilities who choose not to reveal their talents, hiding formidable skills beneath ordinary appearances, like a tiger waiting to pounce or a dragon coiled in the depths, suggesting you should never underestimate anyone based on surface impressions.”

Chinese characters carry semantic density that sequential English text has to spell out explicitly. Maybe that’s why a Chinese lab landed on rendering text as images to compress it.

But here’s what the paper doesn’t answer: Can AI models actually read this compressed visual context back? Or must they decode everything to text first? That distinction determines whether this is an architectural breakthrough or an elegant compression method with limited practical use.

From their conclusion (page 19):

“OCR alone is insufficient to fully validate true context optical compression and we will conduct digital-optical text interleaved pretraining, needle-in-a-haystack testing, and other evaluations in the future.”

Translation: “We’ve built the compression engine. We don’t yet know if LLMs can use it without decompressing first.”

Managing Memory: Forgetting by Design

DeepSeek-OCR proposes using progressive downsampling as a strategy to manage memory.

Recent context: Full text (high fidelity, expensive)

Medium-old: High-res visual (256 tokens/page, ~95% fidelity)

Old: Low-res visual (64 tokens/page, ~85% fidelity)

Ancient: Ultra-compressed (16 tokens/page, gist only)

Store more memory by keeping older or less relevant information at “lower resolution”…not unlike how human memory works. Recent conversations crystal clear, things from six months ago fuzzy, ancient history mostly gone.

The Promise: Potential Applications

1. AI Companions with Real Memory

Persistent memory with natural decay. The AI equivalent of “I remember we discussed this, though I’ve forgotten some details.”

You return after three months. The AI remembers your ongoing projects, communication style, past discussions, not perfectly, but the way a human colleague would.

2. Company-Wide Knowledge Bases

Current approaches are broken:

Full text retrieval: Limited to ~10 documents per query

RAG: Retrieves isolated chunks from a few documents, can’t synthesize patterns across dozens of full documents

Summarization: Lossy, throws away details

With optical compression, thousands of company documents could fit in a single context window. The model sees everything simultaneously, enabling the kind of cross-document pattern recognition that currently requires human analysts.

3. Multilingual + Multimodal Processing

DeepSeek-OCR supports nearly 100 languages and can process charts, diagrams, formulas alongside text in the same compressed representation. Where current models need separate processing for text versus visuals, optical compression unifies them.

Imagine, for example, a research assistant reading papers in Mandarin, German, and English, extracting insights from both text and charts.

In the paper, DeepSeek-OCR claims to be able to process 200,000+ pages per day on a single A100, handling complex documents (tables, formulas, charts) in a single unified model rather than orchestrating multiple specialized pipelines.

If this works, Deepseek-OCR could be good enough to generate training data for other models without human labeling.

The Economics of Open Source

Is Silicon Valley overcapitalization its very Achilles heel? Airbnb recently revealed they’ve switched from OpenAI to open-source Qwen from Alibaba for most workloads, citing cost as the primary driver.

For companies processing millions of queries daily, self-hosting open-source models can reduce inference costs by an order of magnitude… potentially millions in annual savings.

As inference costs dominate AI budgets, companies are pulling every lever: smaller models, cheaper hosting, token optimization. Optical compression fits this trend: 10× fewer tokens theoretically means 10× lower inference cost (if it works).

The uncomfortable question: Are Silicon Valley’s frontier labs over-capitalized to the point where they can’t innovate on efficiency? When you have billions in funding, why optimize for cost?

Constraint breeds creativity. Airbnb needs cheap inference, adopts Qwen. Chinese labs building for resource-constrained markets need compression, build DeepSeek-OCR.

Maybe the next wave of AI innovation doesn’t come from bigger models, but from companies forced to make smaller models work better.