A Free Model That Outperforms OpenAI?

Why open source wins with operating companies: data moats > model moats

Moonshot AI (one of China’s “Six AI Tigers”) just released Kimi-K2 Thinking, an open-source model that claims to outperform GPT-5 Pro on difficult benchmarks. A few months earlier, Meituan (China’s food-delivery giant, roughly its DoorDash, better known for delivering noodles by scooter) open-sourced LongCat-Flash, a frontier-class model that rivals Google’s best systems. Both companies gave their models away for free.

This is a signal of where the AI landscape is heading: intelligence is no longer confined to research labs. It’s emerging from operating companies that sit on millions of daily transactions and terabytes of behavioral data that no frontier lab can access.

Operating Companies Build Different Moats

Frontier labs build better general-purpose models. Operating companies build better domain-specific models. The main difference is data access.

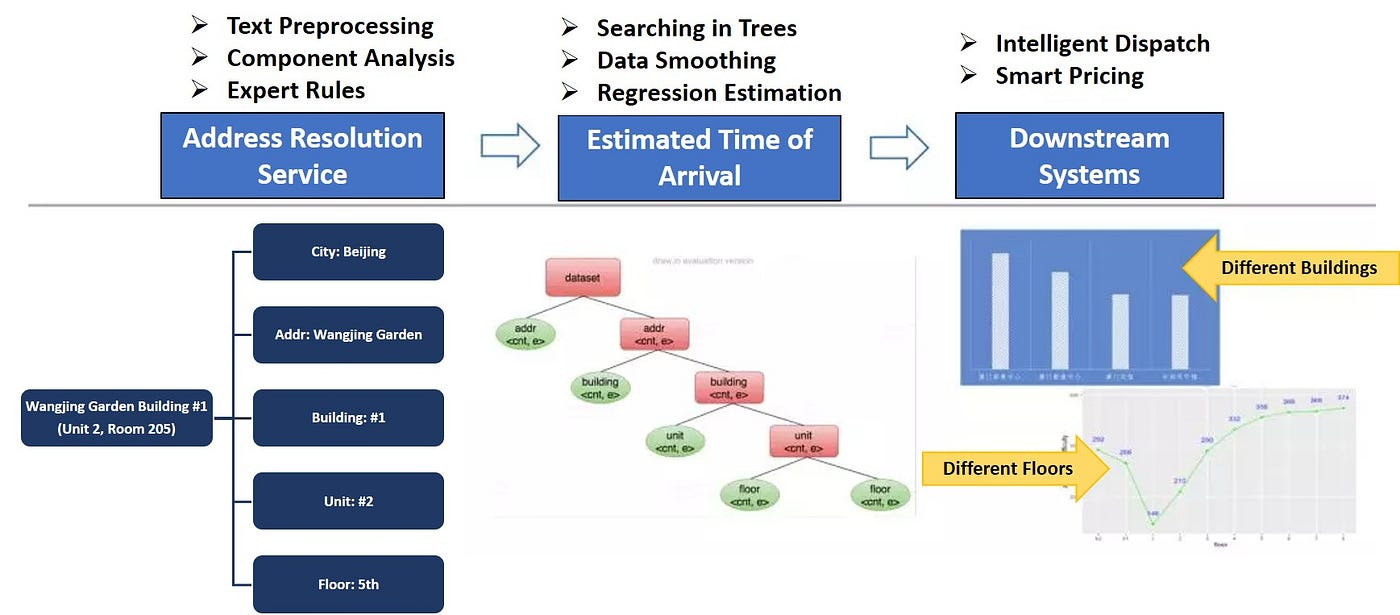

Meituan open-sources its SOTA base model but runs a proprietary version internally, trained on millions of daily delivery transactions. That combination (open weights plus private data) creates a flywheel that closed-source model providers can’t replicate. Better routing predictions improve delivery times. Better delivery times generate more orders. More orders create more training data. The model compounds with every transaction.

The economics are stark. For companies running tens of millions of inferences a month, self-hosting open-source models can cost 100-500x less than calling frontier APIs. Add much lower latency (20-50ms locally vs. 200-500ms via API), data privacy, and customization, and the calculation is clear: build on APIs and give up margin, or self-host and gain control.
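To make the trade-off concrete, here is a minimal Python sketch of the monthly cost comparison. Every input (the per-token API price, GPU hourly rate, serving throughput, request volume, and fixed ops overhead) is a hypothetical placeholder, not a quoted price, and the model assumes always-on GPUs billed for the full month under perfectly even load. The multiple it produces depends entirely on those inputs.

```python
import math
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # approximate average hours in a month (8,760 / 12)


@dataclass
class Workload:
    requests_per_month: int
    tokens_per_request: int  # input + output tokens combined (simplifying assumption)

    @property
    def tokens_per_month(self) -> int:
        return self.requests_per_month * self.tokens_per_request


def api_monthly_cost(w: Workload, usd_per_million_tokens: float) -> float:
    """Pay-per-token API: cost grows linearly with token volume."""
    return w.tokens_per_month / 1_000_000 * usd_per_million_tokens


def self_hosted_monthly_cost(
    w: Workload,
    gpu_hourly_usd: float,
    tokens_per_gpu_second: float,
    fixed_ops_usd: float,
) -> float:
    """Self-hosting: enough always-on GPUs to serve the volume, plus fixed ops overhead.

    Assumes perfectly even load, so GPU count = ceil(required GPU-hours / hours in a month),
    with at least one GPU running.
    """
    gpu_hours_needed = w.tokens_per_month / tokens_per_gpu_second / 3600
    gpus = max(1, math.ceil(gpu_hours_needed / HOURS_PER_MONTH))
    return gpus * HOURS_PER_MONTH * gpu_hourly_usd + fixed_ops_usd


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; substitute your own quotes and benchmarks.
    workload = Workload(requests_per_month=30_000_000, tokens_per_request=1_000)
    api = api_monthly_cost(workload, usd_per_million_tokens=10.0)
    hosted = self_hosted_monthly_cost(
        workload, gpu_hourly_usd=2.50, tokens_per_gpu_second=5_000.0, fixed_ops_usd=0.0
    )
    print(f"API: ${api:,.0f}/month, self-hosted: ${hosted:,.0f}/month, ratio: {api / hosted:.0f}x")
```

API spend scales with every token, while self-hosted spend scales in whole-GPU steps, which is why the gap widens at high volume. Raising `fixed_ops_usd` to cover an ML ops team, or serving at low utilization, narrows it quickly.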

Airbnb runs Alibaba’s open-source model Qwen in production for exactly these reasons. Any operating company with high-volume, latency-sensitive workloads, such as DoorDash, Uber, or Booking.com, faces the same trade-off.

Three Strategies in the Frontier Model Race

The frontier-model race is splitting in different directions, with companies pursuing three distinct strategies: high burn, high trust, and high efficiency.

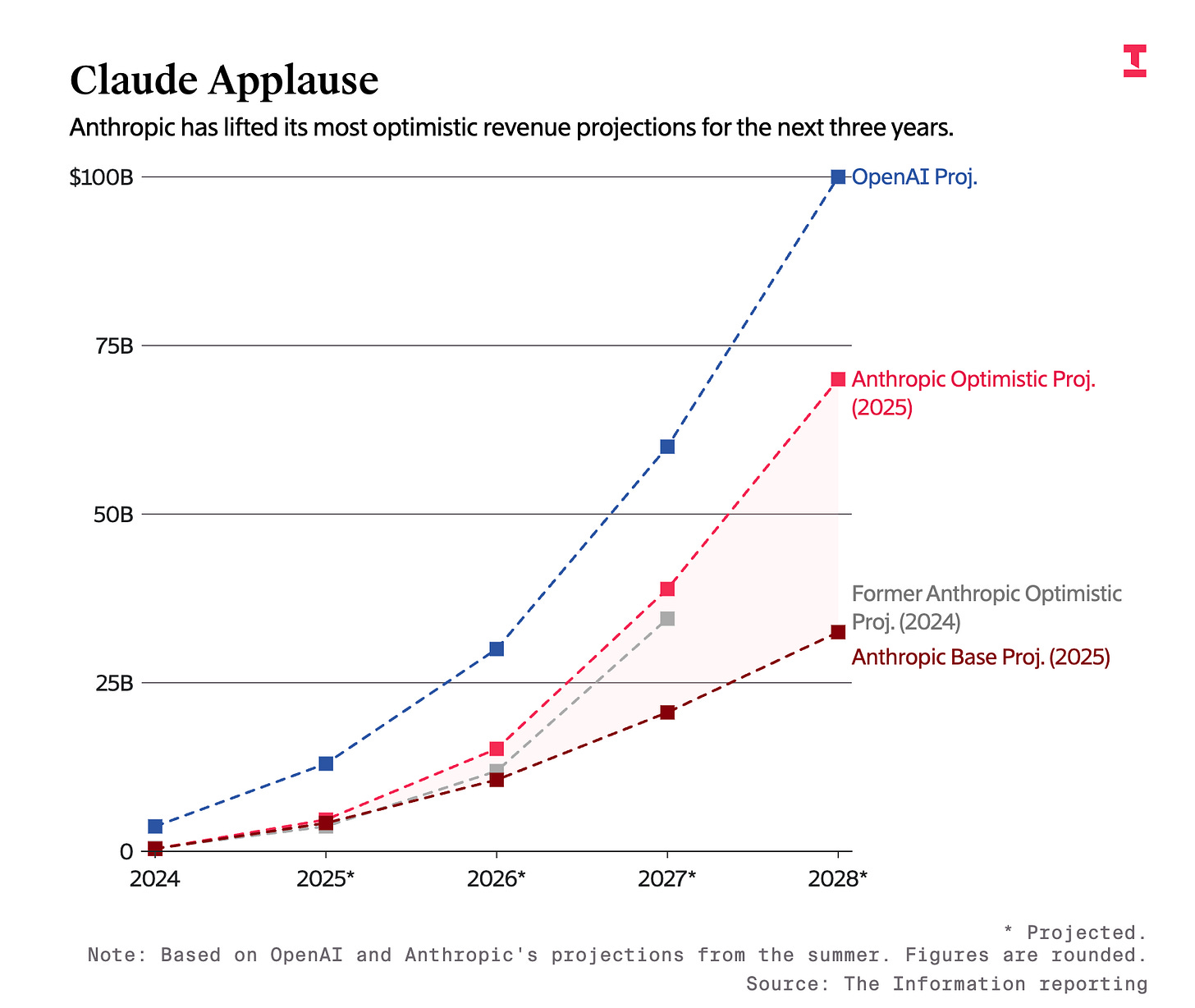

OpenAI is burning toward AGI: roughly $13 billion in revenue this year, about $8 billion in training spend, and $1 trillion in compute commitments over the coming years.

The bet: AGI is winner-take-all, and near-term losses are the price of long-term dominance.

However, consumer subscriptions ($20/month) barely cover inference costs ($10-15/user/month), and without high-margin enterprise revenue, the burn continues.

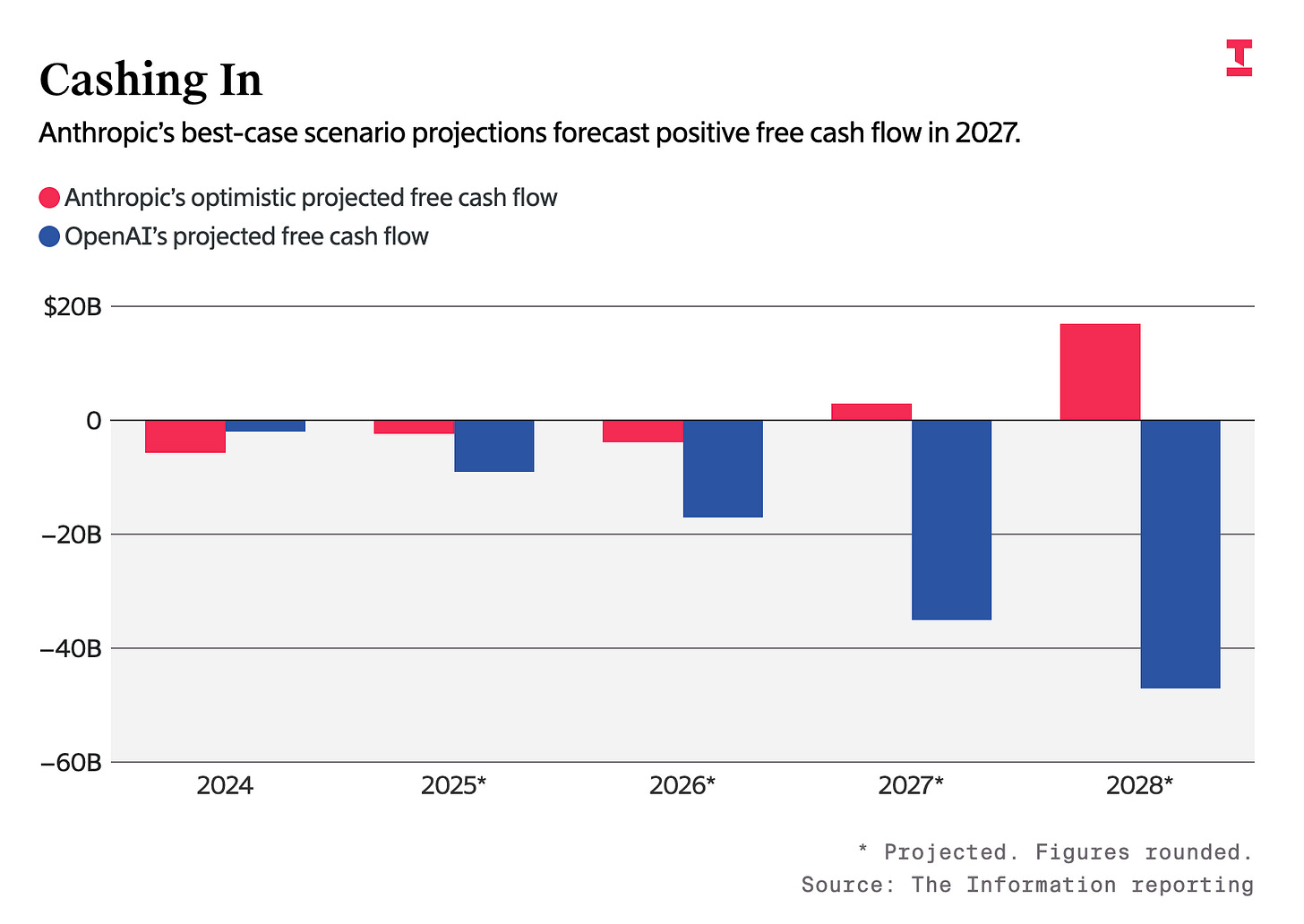

Anthropic, by contrast, is selling reliability to enterprises. It has overtaken OpenAI in enterprise adoption, leading enterprise LLM API usage with a 32% share versus OpenAI’s 25%. It projects $70 billion in annual revenue by 2028 (up from $5 billion this year) and positive cash flow by 2027, years before OpenAI is expected to break even.

Fortune 500 companies choose Anthropic’s APIs over self-hosted open source because they don’t want to manage infrastructure. They’re paying for guaranteed uptime, data privacy commitments, and regulatory compliance without having to staff ML ops teams.

Meanwhile, Chinese players, from giants like Alibaba (Qwen3) and Meituan (LongCat-Flash) to “AI Tigers” like Zhipu (GLM-4), are collapsing costs through open source. These state-of-the-art models run at roughly a hundredth of the cost of frontier APIs and are already embedded across commerce, logistics, and public infrastructure.

Their bet: intelligence commoditizes, so winning means deployment at scale and ecosystem control, not model ownership. Open-sourcing accelerates adoption and creates network effects that closed models can’t match.

Google, for its part, is trying to do it all. In the last month alone, it has announced everything from new AI slide makers to moonshot bets like quantum breakthroughs and space data centers. It’s building across consumer, enterprise, infrastructure, and edge simultaneously, the only major player refusing to specialize.

The Open Source Irony

There’s tension in OpenAI’s origin story. Founded as “OpenAI” to democratize intelligence, it became the most closed of the major labs and now argues that it should have government backing as a matter of “critical national infrastructure.”

The irony: Open source might serve that national interest better.

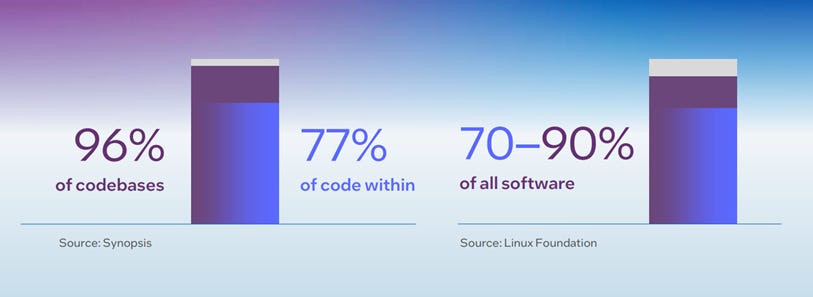

Note: 70-90% of a typical commercial codebase is open-source software (yes, even at Big Tech). Open source accelerates innovation, distributes the cost of improvements, and prevents single points of failure. In AI production, it lets companies keep sensitive data internal while still leveraging frontier architectures.

China understood this. By open-sourcing frontier models, it is building intelligence infrastructure that enables private innovation across companies.

Over the next few years, the pattern will become clear: for most production AI use cases, intelligence becomes a commodity. Data moats will matter more than model moats.

Operating companies that combine proprietary data with open models will build advantages that frontier labs can’t replicate through smarter algorithms alone. Frontier labs will keep doing what they do best: chasing better, more specialized frontier models for scientific breakthroughs.

OpenAI won consumer mindshare. Anthropic won enterprise margins. China won cost structure. Operating companies are winning integration.

The question in the near future isn’t who builds the smartest model. It’s who builds the most durable business on top of intelligence that’s increasingly free.

The Download — News That Mattered This Week

OpenAI lays groundwork for a juggernaut IPO at a valuation of up to $1 trillion. Meanwhile, OpenAI co-founder Ilya Sutskever disclosed more details about Sam Altman’s November 2023 ouster in a court deposition, including a 52-page document on management issues, a ‘Brockman Memo’, and talks about a possible merger with Anthropic.

Google has a ‘moonshot’ plan for AI data centers in space: by putting them in orbit, it hopes to harness solar power around the clock. The dream is a near-unlimited source of clean energy that could let the company pursue its AI ambitions without the concerns its Earth-based data centers have raised about driving up power-plant emissions and utility bills through soaring electricity demand.

Eric Schmidt-backed FutureHouse launched Kosmos, an AI model that runs intensive scientific studies in 24-48 hours. Its agents have already made a genuine discovery related to a new treatment for one form of blindness (dry age-related macular degeneration, dAMD).

Apple is nearing a deal with Google that would see the iPhone maker pay the tech giant roughly $1 billion a year for a custom version of Google’s Gemini AI model to power its overhaul of Siri, according to a new report from Bloomberg. I predicted this about three months ago, so it’s interesting to see it come true!